A series of Zoom experiments that observes the effects of AI-driven tools on human conversations. Is it possible to achieve genuine connection in video calls? Is the machine bringing us closer or driving us apart?

“Report why we keep talking.”

Video Series, 2022

Carrie Sijia Wang

Made in partnership with the New York Public Library for the Performing Arts and made possible by The Ford Foundation.

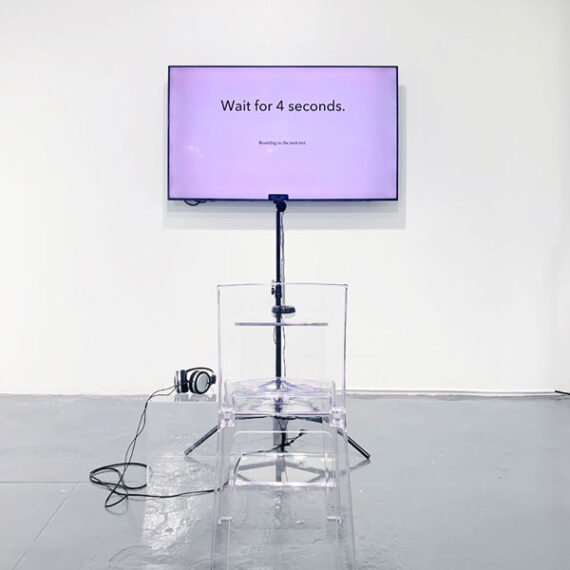

Art & Code 8 at Public Works Administration, curated by Celine Wong Katzman of Rhizome

Art & Code 8 Online Exhibition, website by Yehwan Song

In the videos: Cam, MARIO GUZMAN, theodora alden rivendale, Tong Wu, Zhuoyun (Yun) Chen

In a series of 15-minute Zoom conversations with friends, acquaintances, and strangers, I conducted two experiments to observe the effects of AI-driven tools on human conversations.

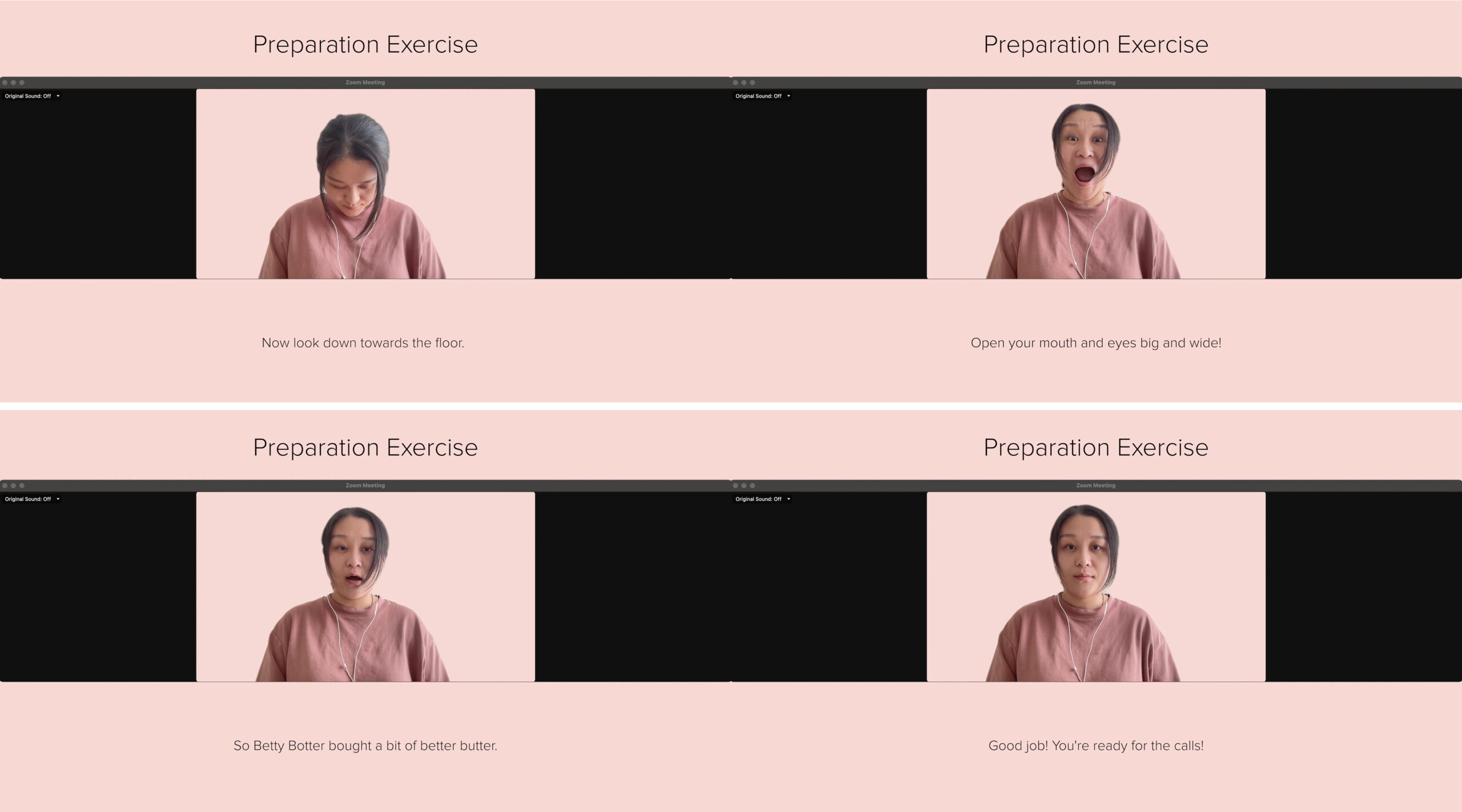

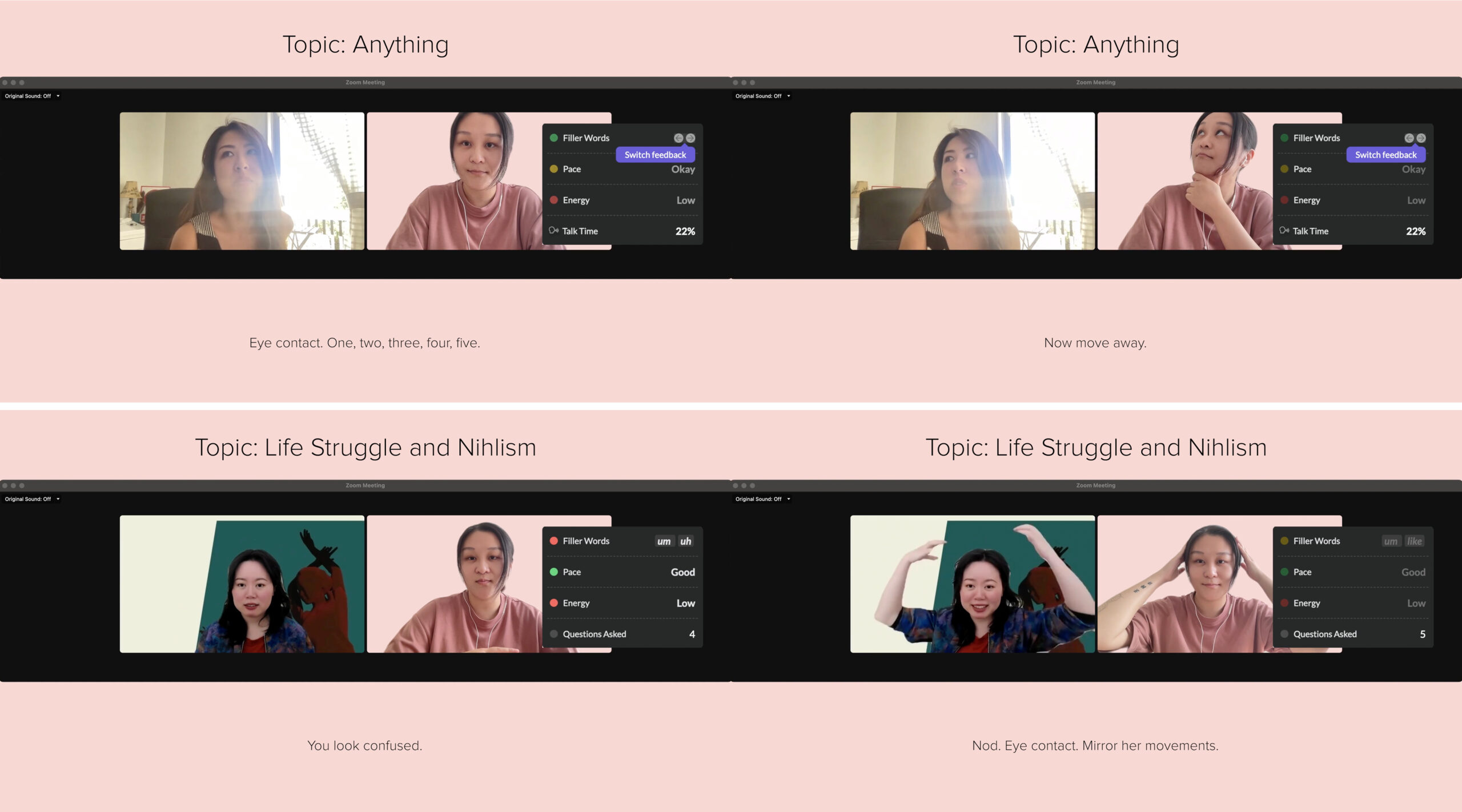

Experiment 01: Becoming a Better Speaker

An off-the-shelf application was used to provide live feedback on how well I spoke during the calls: whether my energy level was adequately high, my tone of voice positive, my use of “filler words” kept to a minimum, and so on. Based on the feedback, I would adjust my behavior to the best of my ability.

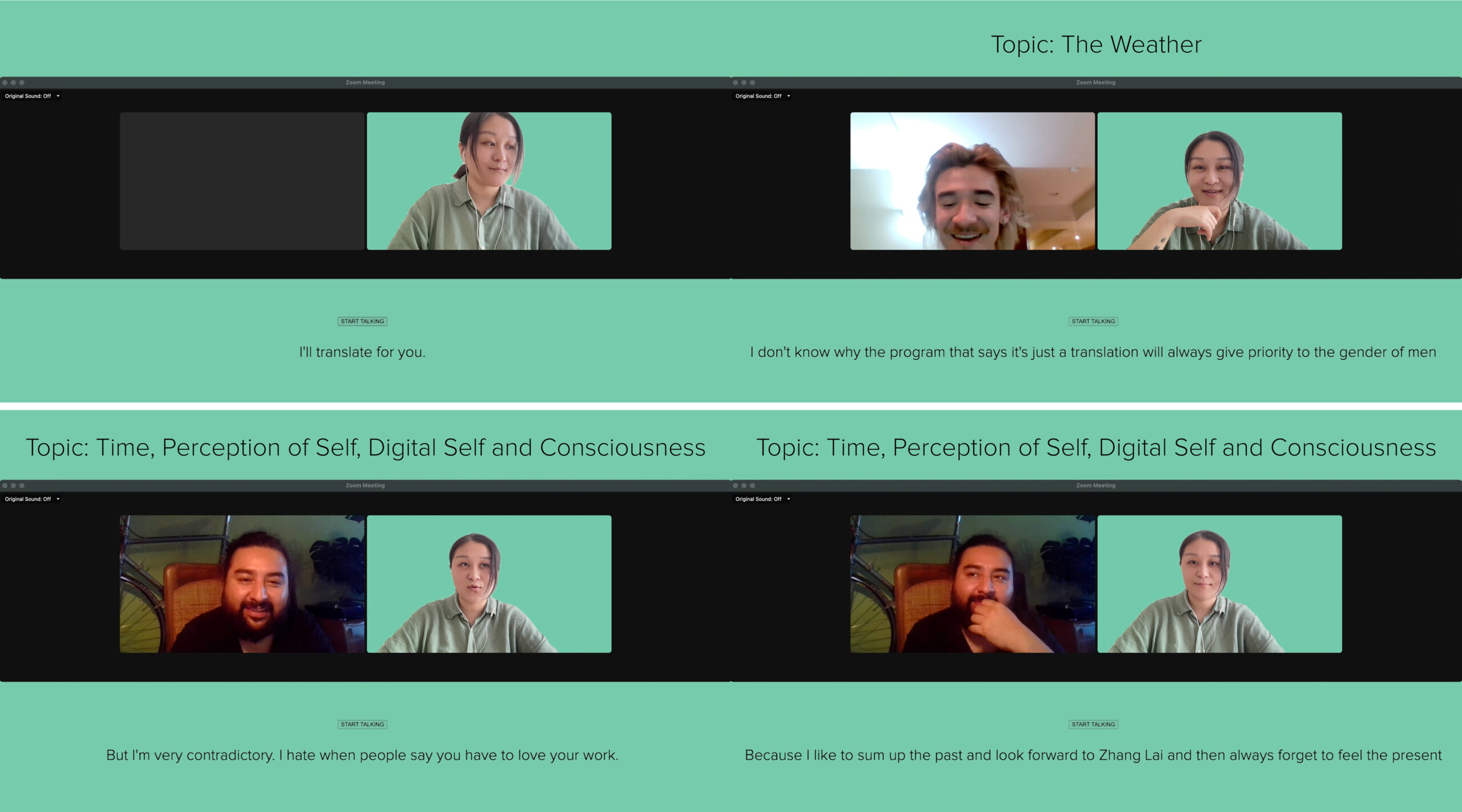

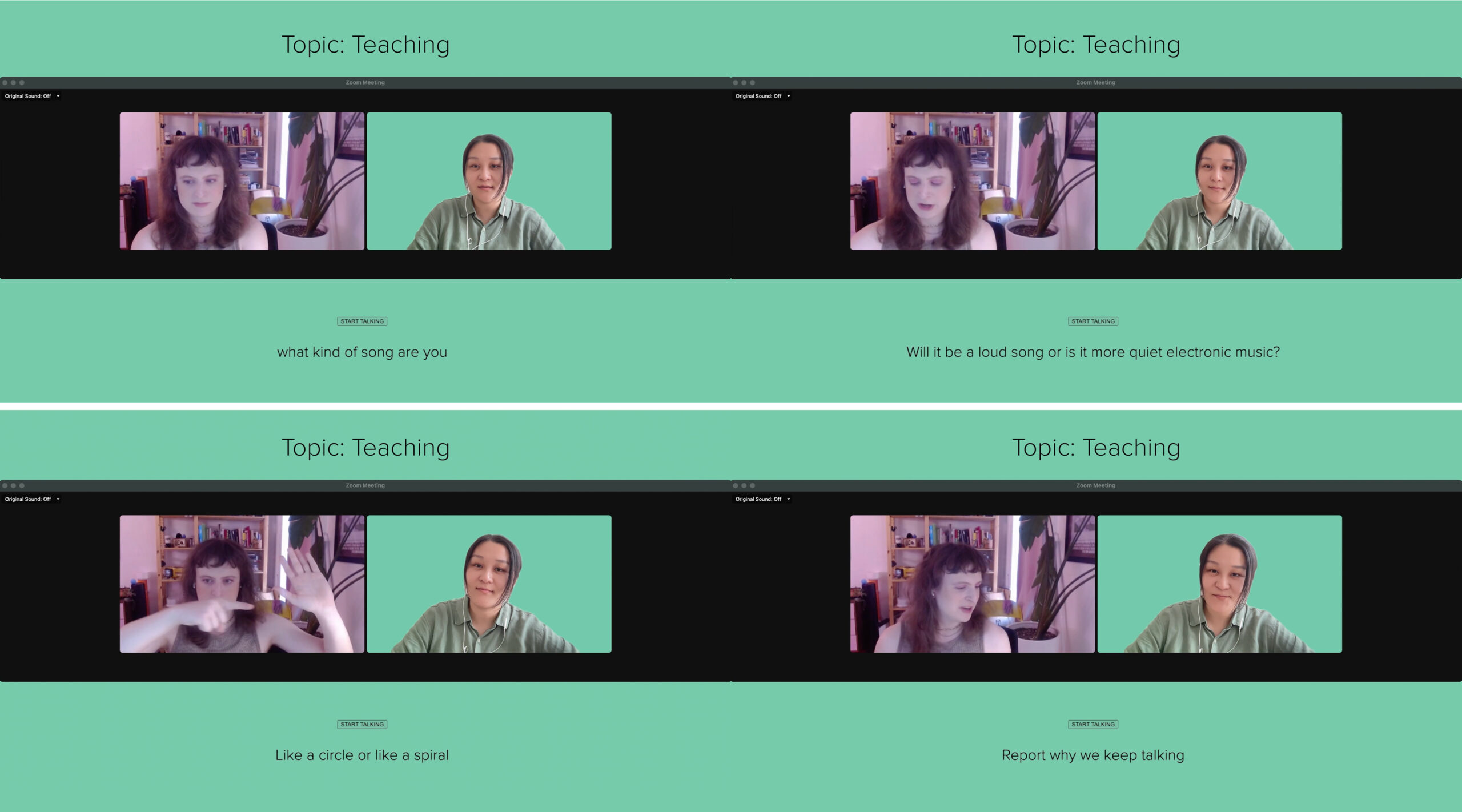

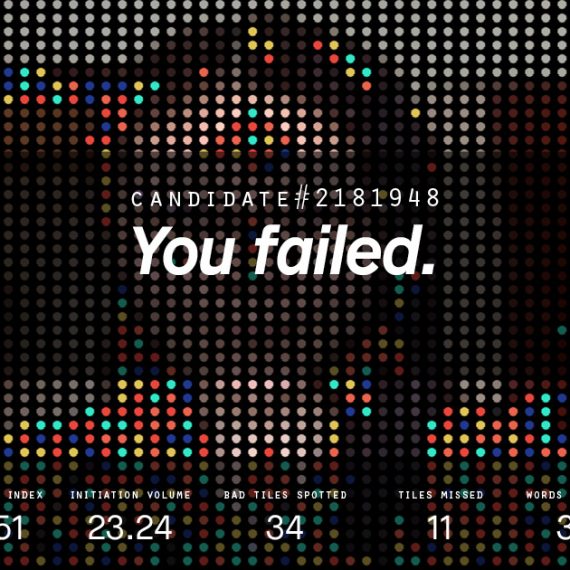

Experiment 02: Overcoming the Language Barrier

By connecting the Speech Recognition API to Google Translate, I made a custom computer program that live-translates Chinese to English. During the calls, I spoke my first language while my visitor could hear only the machine-translated English.
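As a rough illustration of this kind of pipeline (not the program used in the project), the sketch below chains a recognizer and a translator so that each chunk of speech comes out as one line of English. The names `live_translate`, `fake_recognize`, and `fake_translate` are hypothetical; the two fake functions are offline stand-ins for the speech recognition and translation services, whose actual calls the text does not specify.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical stand-ins: the text names neither the speech recognition
# calls nor how Google Translate was invoked, so both are passed in.
Recognizer = Callable[[bytes], str]  # audio chunk -> Chinese text
Translator = Callable[[str], str]    # Chinese text -> English text


def live_translate(
    chunks: Iterable[bytes],
    recognize: Recognizer,
    translate: Translator,
) -> Iterator[str]:
    """Yield one English line for each audio chunk that contains speech."""
    for chunk in chunks:
        text = recognize(chunk).strip()
        if not text:  # silence or unrecognized audio: nothing to translate
            continue
        yield translate(text)


# Toy stand-ins so the sketch runs without any network service.
def fake_recognize(chunk: bytes) -> str:
    # Pretend the "audio" is already UTF-8 text.
    return chunk.decode("utf-8")


FAKE_DICTIONARY = {"你好": "Hello", "谢谢": "Thank you"}


def fake_translate(text: str) -> str:
    return FAKE_DICTIONARY.get(text, "[untranslated]")


if __name__ == "__main__":
    audio = ["你好".encode("utf-8"), b"", "谢谢".encode("utf-8")]
    for line in live_translate(audio, fake_recognize, fake_translate):
        print(line)
```

Passing the recognizer and translator in as functions keeps the sketch independent of any particular service; a real version would replace the two fake functions with calls to actual speech recognition and translation APIs.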

Results:

In the first experiment, I found myself constantly trying to please the algorithm: fixing my posture, speaking at a higher frequency to appear more “energetic” for the machine, sometimes even deliberately censoring myself to sound more uplifting. Though my Zoom visitors seemed generally happy with how the conversations went, I felt distracted and dishonest during the calls.

In the second experiment, the existing biases in the translation program resulted in mistranslations. The Speech Recognition API, because of the data it is trained on, may be better at recognizing some accents than others. Another notable instance is that in Chinese, there is no difference in pronunciation between “he,” “she,” and “it”; they are all pronounced “ta.” Google Translate translates “ta” into “he” by default, no matter what the context is.
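The pronoun issue described above can be made concrete with a small sketch. The three pronouns are written with different characters but share one pronunciation, so a system working from sound alone has three valid candidates and must pick one. The function names and the fallback to “he” are assumptions that mirror the behavior observed in the experiment; this is not Google Translate's actual logic.

```python
# Written Chinese distinguishes the three pronouns, but all of them
# are pronounced "tā", so the distinction is lost in speech.
PRONOUN_MEANING = {"他": "he", "她": "she", "它": "it"}
PRONOUN_SOUND = {"他": "tā", "她": "tā", "它": "tā"}


def candidates(sound: str) -> list[str]:
    """Return every English pronoun that a spoken sound could mean."""
    return sorted(
        PRONOUN_MEANING[char]
        for char, pinyin in PRONOUN_SOUND.items()
        if pinyin == sound
    )


def translate_heard(sound: str, default: str = "he") -> str:
    """Pick one pronoun for a sound, falling back to a fixed default.

    The default of "he" is an assumption that imitates the observed
    behavior; no context is consulted.
    """
    options = candidates(sound)
    if len(options) == 1:
        return options[0]
    return default


if __name__ == "__main__":
    print(candidates("tā"))       # all three pronouns are possible
    print(translate_heard("tā"))  # the fixed default wins
```

Choosing correctly would require context from the rest of the conversation, which a single-sound lookup like this one never sees.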

The mistranslations, frustrating at times, also had an upside. They brought the conversations to unexpected places, where I was able to learn more about my visitors from unplanned perspectives. And however strange it may be, I felt safe and comfortable hiding behind the technological mask, talking freely in my first language without worrying about how I sounded.